One of my mantras about writing is that writing is thinking. Writing is simultaneously the expression and the exploration of an idea¹. As we write, we are trying to capture an idea on the page, but in the act of that attempted capture, it’s likely (and even desirable) that the idea will change.

In my work on how we could teach writing better, I center writing as thinking as part of the writer’s practice (the skills, attitudes, knowledge, and habits of mind of writers) as a way to gauge which kinds of activities may help us develop. If it involves thinking across any one or more of those dimensions, it’s probably worthwhile. If not, not.

I’ve been living this reality in a very concrete way lately, which gives me a handy example to illustrate what I mean when I say writing is thinking. But before I get too far into that, I’m at risk of burying the lede of this newsletter.

I sold my next book, as yet untitled, on the subject of reading and writing in the age of artificial intelligence. It will be published by Basic Books, and if all goes according to plan with the manuscript, which is due from me in December, the book will be available sometime in early 2025, specific date TBD.

I’m very excited, and also anxious because that deadline is reasonably close and I want the book to be as interesting as possible, which means it has colonized a significant portion of my mind.

In other words, I am thinking about it almost all the time.

The process reminds me of how constant and intense this thinking can be. While working on something like this newsletter may be absorbing for a short period as I’m wrestling through the piece, in my experience a book is a different animal. One of the reasons I like a short turnaround on even long manuscripts is that it becomes unpleasant (for me and others around me) to maintain that focus for an extended period.

When I say writing is thinking, I don’t mean it’s only happening when words are hitting the page, either. In a lot of ways I’ve been thinking about the material that will become this book since the release of ChatGPT last year, or even before that, which is why the actual writing of the manuscript will not take me all that long.

At the same time, the thinking is constantly being revised during the process. This stuff will follow you around unbidden and unwanted. Quite a few mornings I’ve woken up to the realization that some portion of my subconscious has been gnawing at an idea even as I was sleeping.

The iterative process of working on the book has also renewed my appreciation for how vital re-thinking is to understanding. The book started with a general notion, rooted in the newsletter I wrote a week after ChatGPT’s public release in which I declared that “ChatGPT Can’t Kill Anything Worth Preserving.”

At first, I thought this would be the crux of the book, the argument that ChatGPT is incapable of achieving certain things, but the limits of that approach became apparent. As I worked on a proposal that would ultimately expand to almost 15,000 words (about one-fifth of a book itself), I realized that I’d left unanswered the question of what exactly is worth preserving when it comes to reading and writing, and why.

These are the questions around which the proposal is built, a proposal which resulted in acquisition by Basic Books and my editor, Emily Taber.

And now it comes time to write the book, and lo and behold, what happens? More questions appear, better questions, deeper questions that add facets to the book, and perhaps most importantly, keep me interested in the process.

Perhaps I can illustrate with a chapter I’ve been working on this week. In truth I’ve been working on four (okay, five) different chapters this week, each one covering a different aspect of the subject that I think belongs somewhere in the opening sections of the book, jumping from one to another as things occur to me. Working on one thread seems to suggest necessary additions to another.

As I say, writing is thinking.

So, one of the chapters is on the dichotomy that exists around AI, with some people arguing that it will unlock untold human flourishing, while others believe it may herald the extinction of mankind. As I point out in the draft chapter, some people hold these seemingly opposing views simultaneously. I’m interested in exploring what’s up with that, and also what’s up with the fact that I can’t seem to locate myself in either camp, nor even in the middle.

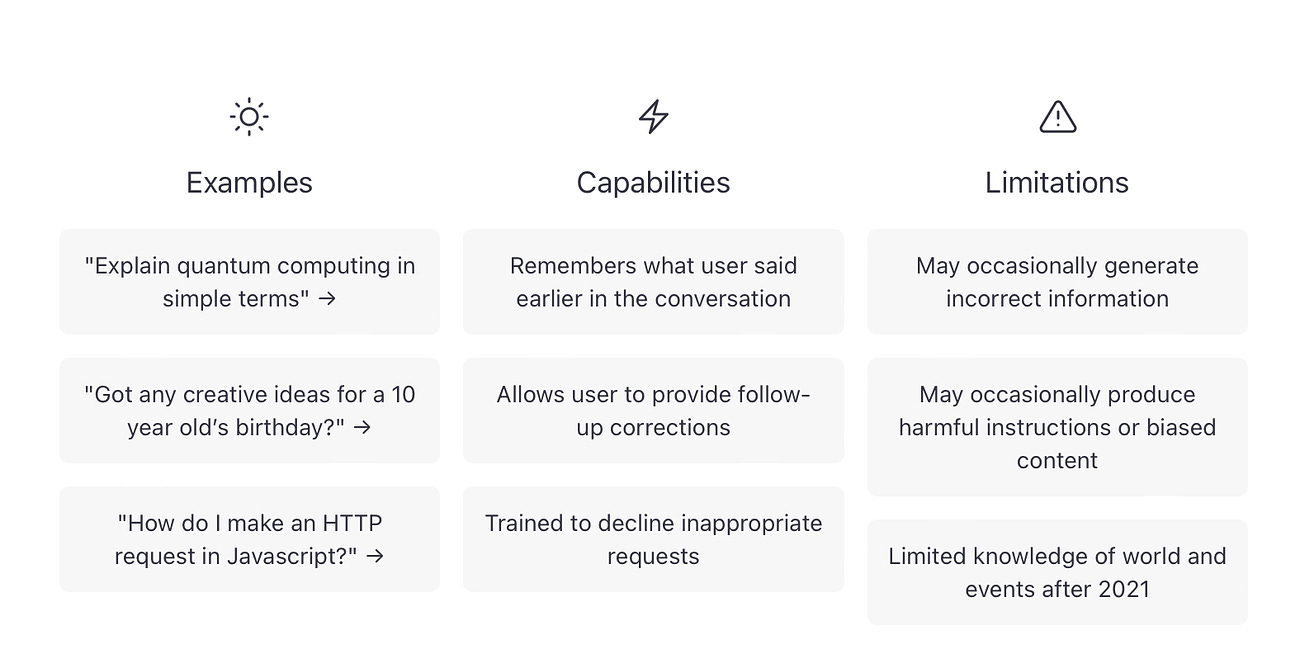

As is my method, I have compiled a bunch of reading and sources that I think might be worth referencing during these explorations. One of them is a piece published by tech investor Marc Andreessen at his own site titled “Why AI Will Save the World.”

I don’t want to pre-empt my own book by sharing everything I have to say, but as I was writing, I started pulling on a thread of thought that revealed connections I hadn’t previously considered.

One of the areas in which Andreessen believes AI will be a help is warfare. Aided by AI, military leaders will be able to make “much better strategic and tactical decisions, minimizing risk, error, and unnecessary bloodshed.”

I had read Andreessen’s piece in part or whole multiple times up to the moment I started to explore this notion in the chapter, but this week was the first time I flashed to Vietnam-era Secretary of Defense Robert McNamara and the “systems analysis” he applied to Pentagon practices, predicting U.S. and Vietnamese casualties based on different scenarios, metrics which were supposed to tell them who was “winning” the war.

I did some Googling to fill in my memory, which came mostly from Errol Morris’s documentary on McNamara, The Fog of War, and found an interesting piece arguing that the chief legacy of McNamara’s approach was not the attempt to bring rationality to war, but instead the treatment of war as an “economic” rather than a “political” problem.

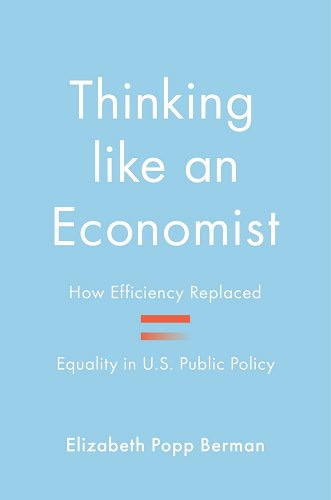

Internal bells rang as I realized that this too is Andreessen’s analytic framework. Increased speed, efficiency, productivity: these are the values by which he is defining “saving the world.” This is what Elizabeth Popp Berman calls the “economic style of reasoning,” a style which has become dominant in the country’s post-Cold War era.

The economic style of reasoning is presented as value neutral, but as Dr. Berman shows in her book, this is a myth, and more than a bit of a con job. Of course there are values at work in the economic style of reasoning, values which just so happen to align with the interests of capital.

Many of you probably saw the ne plus ultra example of the economic style of reasoning in a viral clip of an Australian real estate mogul/financier arguing that laborers needed to experience additional unemployment in order to get the economy back on track, which to someone like him means low interest rates.

The man was roundly roasted as a ghoul, but the underlying value system is identical to Andreessen’s take on the future of AI, one where we are expected to be optimized to an algorithmic standard.

That unexpected connection to McNamara and economic reasoning has elevated the ultimate argument in the chapter: that this talk of utopia versus apocalypse is a dodge for the deeper conversations we should be having about living with this stuff. This is particularly important because the people who say that AI is going to usher in a utopia make that utopia sound positively dystopian, as though we’ll be living like the humans in WALL-E, glued to our mobile chaise lounges and fed a constant stream of entertainment.

Here’s where I have to admit that I can’t guarantee that any of that material will wind up in the book. Right now, I’m excited about it, but maybe I’m wrong. Maybe my editor will think I’ve gone too far afield or maybe, just maybe, I’ll think of something even better.

But the point I’m trying to make here is that all of this thinking matters even if it’s only experienced by me. One of the metrics by which I judge students’ success in developing as writers is whether they experience, and can identify, the sensation I describe above: the literal exploration of an idea rooted in their unique intelligence.

That’s the stuff we should be striving for when we talk about learning, IMHO.

Stoked by having conjured these connections during the week, this morning I tucked in for my weekly long Peloton endurance ride, a 60-minute jaunt with Christine D’Ercole, and sent my brain wandering over what I’ve done this week, trying to figure out what ties the chapters together and why this grouping feels related to me as the unit which will kick off and frame the reader’s experience of my subject.

I realized I didn’t want to just argue as to what is worth preserving in an AI world, but I also wanted to explore why it already seems so hard to preserve those things.

The word “alienation” popped into my head. As I pedaled, I rolled this around, wondering why, and after a while, mid-ride, I picked up my phone and started typing into the notes app: The emotions we have experienced since the arrival of ChatGPT and being confronted with a future in which true artificial intelligence seems not just possible, but perhaps likely, even imminent (the confusion, the excitement, the fear) are all built on the same foundation: a profound sense of alienation.

I don’t know if I even fully believe this yet, but I’m interested in figuring out if it’s true.

Writing is thinking.

Links

At the Chicago Tribune this week I extoll the virtues of John McPhee, on the occasion of the release of his book of fragments, Tabula Rasa. There’s probably no better example of writing as thinking than the work of John McPhee, and fortunately for us, he’s provided artifacts which demonstrate how he thinks in both Tabula Rasa and his earlier book on his writing method, Draft No. 4. If I were teaching a longform nonfiction writing class, I’d assign those books plus one of McPhee’s fully realized narratives like Oranges and try to get students to just do what he’s doing. (Easier said than done, but worth doing, for sure.)

The Booker Prize has released its shortlist of nominees, including Paul Murray’s The Bee Sting, which I’m in the midst of right now and which is just terrific.

A bunch of big-name authors under the umbrella of the Authors Guild have sued OpenAI for copyright infringement. I think these issues are complicated, but I also think lawsuits are a necessary way to bring more transparency to what these companies are up to.

LitHub runs down 38 literary movies and TV shows to watch this fall, and reminds us to support the WGA in its strike in order to protect the labor of the people who make these stories possible.

From my friends at McSweeney’s, in honor of the official start of fall, “It’s Decorative Gourds Season…”

Recommendations

Another week where I’ve used up all my requests for my Trib column, so if you act quickly and get to the top of the queue, you will get a nearly immediate response.

Book Giveaway

Still can’t get anyone to answer my emails for the books I promised to give away August 20th and August 27th. Maybe rather than drawing from paid subscribers and sending an email that seems to be getting ignored or blocked as spam, if people want free books, they can just say something like “I want free books” in the comments and I’ll do a drawing from the volunteers. Should we try that?

Take care folks. I’m off to do some more thinking.

JW

The Biblioracle

¹ Hopefully it’s clear that “idea” is a stand-in for something bigger than simply ideas. If we’re talking about fiction or poetry, we may be trying to capture something more inchoate and harder to define, but which is nonetheless entirely recognizable once we’re in its presence. If you want to call that “art,” I’m not going to stop you.